But if your dataset is large enough, you can play with different k (maybe tune it by cross-validation) and estimate the breakpoint precisely enough. This approach does not allow you to estimate the breakpoint exactly. Although they are not very close, the fitted curves are: Numbers 0.57 and 0.825 correspond to 0.5 and 1.25 in the true DGP. With this method, one line is fit to all.

Piecewise regression code#

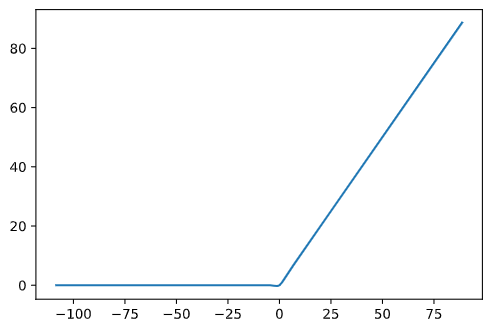

This code will return a vector of estimated coefficients to you: ĭue to Lasso approach, it is sparse: the model found exactly one breakpoint among 10 possible. Introduction Segmental regression is also commonly referred to as piecewise regression or segmented regression. Plt.title('fitting segmented regression') Plt.plot(x, model.predict(basis), color='k') Thresholds = np.percentile(x, np.linspace(0, 1, k+2)*100)īasis = np.hstack(, np.maximum(0, np.column_stack(*k)-thresholds)]) iterations 500 x np.random.normal (0, 1, iterations) 10 y np.where (x < 0, -4 x + 3, np.where (x < 10, x + 48, x + 98)) + np.random.normal (0, 3, iterations) plt. Y = y_expected + np.random.normal(size=x.size, scale=0.5) So lets start with two different datasets that we know are good candidates for piecewise regression. If you are unsatisfied with discontinuous model and want continuous seting, I would propose to look for your curve in a basis of k L-shaped curves, using Lasso for sparsity: import numpy as np In model (3.3) y is a vector of length n, n is the total number of measurements, e is a vector with random independent. That solution fits discontinuous regression.

There is a blog post with a recursive implementation of piecewise regression. Piecewise polynomials, even those continuous at the knots, tend not to be smooth: they rapidly change the slope at the knots. Estimating regression models with unknown breakpoints. bad fits when you have more unknowns than data fit with a breakpoint guess get the linear regression matrix. Plt.plot( *SegmentedLinearReg( X, Y, initialBreakpoints ), '-r' ) Ysolution = a*ones + b*Xsolution + np.sum( Rk, axis=0 ) Xsolution = np.insert( np.append( breakpoints, max(X) ), 0, min(X) ) If np.max(np.abs(newBreakpoints - breakpoints)) < dt/5: Here is an implementation in python: import numpy as npĭef SegmentedLinearReg( X, Y, breakpoints ):īreakpoints = np.sort( np.array(breakpoints) ) This is the method used in the R Segmented package. In particular, the convergence or the result may depends on the first estimation of the breakpoints. "the process is iterated until possible convergence, which is not, in From the values of the jumps, the next breakpoint positions are deduced, until there are no more discontinuity (jumps). Hashes for piecewise-regression-1.3.0.tar.

The positions of the breakpoints are iteratively estimated by performing, for each iteration, a segmented linear regression allowing jumps at the breakpoints.

It works for a specified number of segments, and for a continuous function. Muggeo is relatively simple and efficient.

0 kommentar(er)

0 kommentar(er)